At the CloudStack Collaboration Conference 2014 in Denver I had the honour of presenting the opening keynote on the last day. Of course you stare at a number of people who are suffering from hangovers, but that is a small price to pay.

Selecting a topic for a presentation is always a burden. For this talk I focussed on the position of Open Source in the boardroom. Yes, over the last decade we have seen a rise in Open Source software being used in corporates, enterprises and even the public sector. But at the same time, Oracle, CA and BMC still have turnover numbers that dazzle you. And when you take into account that software plays a bigger role than ever (software is going to eat the world, remember), this is still remarkable.

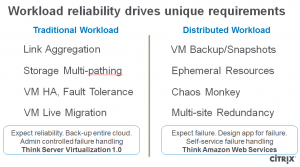

This is especially remarkable when you know that the CIO nowadays does not want vendor lock-in, and that in a large application landscape, items like datacenter consolidation, application landscape normalisation and the move to the (hybrid) Cloud require his attention, with a strong focus on cost and flexibility (while keeping availability at 100%).

One might think that these trends are reason enough for the typical CIO to have a stronger urge to move parts of his workload to Open Source. Unfortunately, the contrary is true. Last month I witnessed this a couple of times.

At the Pre-Commercial Procurement Market Consultation held by the EU for their Cloud For Europe initiative, the companies that attended the meeting were all commercial parties. Not one representative of Open Source software was part of the discussion. Of course my company was present, and we asked some questions about the position of Open Source in this process.

Later that week Allen & Overy and Black Duck Software organised an early-morning seminar at the Dutch office of Allen & Overy. The theme was Open Source. The presenters gave a very good overview of the status of Open Source software and its legal implications, whether from a merger and acquisition point of view or from a risk management perspective. My main takeaway was that few companies have an Open Source policy in place. They do not know whether the software that is written in their companies, or the software that they buy, contains Open Source. And the numbers do not lie: approx. 30% of all code written is Open Source code. (more info from Black Duck on OSS)

The fact that Purchasing Departments, CIOs and Enterprise Architects are not always in the know is of course stunning. But this is not their issue alone. It is a big issue for the Open Source software industry itself. Open Source software is missing legal bodies that can act on its behalf. For example, CloudStack, the software that powers clouds, has a lot of traction in the enterprise. Loads of companies use this product to build public or hybrid clouds, and yet the community that supports and builds CloudStack consists mainly of developers. Of course CloudStack is backed by Citrix, and they continue to do a good job promoting that their CloudPlatform product is powered by Open Source. The big players here have a stronger presence in the boardroom, more access to analysts and, of course, bigger marketing budgets.

Some companies have developed a business model that makes optimal use of both an Open Source community, with its developers, and a commercial model. Chef and Puppet are good examples. For additional features, security or an on-premise installation you have to buy the commercial versions of their products. These companies use Open Source to gain traction and preserve a good culture in their organisation. Their commercial model ties into this nicely.

I am not saying that this can be changed easily, but for starters I will organise a Golf Event for both CIOs and Open Source people to get acquainted. I have already found some cool people who are willing to participate. If you want to join, let me know and you will get an invite.