Yesterday (7 Nov) I was given the opportunity to speak at the Cloud Expo by Shannon Williams (VP Market Development, Cloud Platforms). As you have probably read in my previous posts, our cloud is ready to rumble. Hence the perfect timing to share some of the lessons we paid for over the last year. In this blog post, I’ll write a short summary. I do so because a lot of people asked me questions during and after the presentation. That gives me the feeling that our experience may be of value to others out there as well.

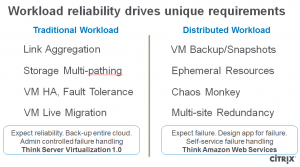

For starters, the assignment we as a team came up with was: design a cloud. A cloud that is capable of running traditional workloads. Workloads we often see at our enterprise customers. Workloads that rely on a solid, redundant infrastructure. Next to that, we want to embrace the new as well. So we need to design for failure and resilience. We need to design for the modern type of (web) applications, or the distributed workload, as Citrix calls it.

To have the ultimate R&D effect, we told ourselves not to use any technique or piece of equipment we had ever used before. Only if we encountered major issues or a slowdown in delivery would we fall back on the stuff we have mastered for years. I won't go into detail here about why we need this cloud way of working and such. If you do want to know, just contact me and I am more than happy to tell you all about it.

The choices so far:

- Storage. Distributed workloads do not need sync storage. Having said that, the storage needs to have a very good level of reliability. After all, we are building for Schuberg Philis. The short list here was NetApp (one of our long-term partners), Gluster and ZFS on commodity hardware (Nexenta). We found that Nexenta delivers the biggest bang for the buck, although their service and commercial organization have not been around as long as those of our current partners. Nevertheless they are a real partner to us and more than willing to move forward aggressively on those issues. If you ask me, they are going to be a real threat to the ‘classic’ storage providers. For the traditional workload we would not take the risk of the fancy new stuff. We played with the idea of building our own Nexenta Metro Cluster for a while, but for reliability and speed reasons we chose to stick to a regular NetApp Metro Cluster. We have operated and implemented those clusters for a long time. On the S3 part of the spectrum, we currently do not offer or build anything. As the S3 API is supported as of Apache CloudStack 4, we have multiple options there in the future. Could be Cloudian, could be something else.

- Switching. Shootout between Cisco and Arista. Same trade-off here as well: enterprise class for traditional workloads versus low-latency, cheaper switching for distributed workloads. As the risk here is much lower than on the storage level, and we have a strong wish to do a lot of Software Defined Networking (SDN), the choice was not that difficult. Arista all the way, also for traditional workloads. Having said that, we still keep an eye on Cisco of course. They came with a very competitive deal, and we all know and love their high-quality devices.

- Compute. Back to what we know over here. The only difference is that we went for the G8 series rather than the G7. It has led to iLO, driver and firmware issues, as always, but those are items that we can conquer.

- Hypervisor. Trade-offs here are mainly around price and features. If your cloud will be a big one, now or in the future, you might want to consider an open source hypervisor. KVM and Xen are the obvious candidates. If you want feature richness and a single-vendor strategy, ESX is your best bet. For us, this is a really important item. We do not want to be locked in to one hypervisor. We do want to work with something cheaper than ESX, but we do not want to spend a gazillion hours on troubleshooting and learning a new virtualization layer. The safe bet for us was to go with XenServer for starters. The commercial version. Not that we do not like the open source one, but in these early days of our cloud, support might come in handy. XenServer Advanced it is. In our traditional environments, by the way, we run a lot of ESX. On the wish list is definitely some KVM as well. When we talked with Citrix about their view on this, they lifted a small corner of the curtain (is that proper English?). The cloud layer will talk to the hypervisor as integrated as possible, but the hypervisor will need to deliver the features! If you need to pick, go back to the virtualization requirements you have, check your wallet and make your pick.

- Software Defined Networking. A pretty new ballgame in the world. But if you look at the possibilities, they are almost endless. One of our lead network engineers ran into Nicira and he was sold. We contacted the guys and we were all very impressed. What Martin Casado and his team have accomplished in such a short timeframe is really amazing. This SDN gives us the possibility to really build hybrid clouds, to set up tunnels within DTAP environments, etc. After a short PoC we knew that this is amazing stuff.

- Cloud Orchestration. The long list we used was OpenNebula (http://opennebula.org/), Eucalyptus (http://www.eucalyptus.com/eucalyptus-cloud), OpenStack (http://www.openstack.org/), CloudStack (http://incubator.apache.org/cloudstack/) and vCloud (http://www.vmware.com/products/datacenter-virtualization/vcloud-suite/overview.html). Again, vCloud was not the first option for us. Do not get me wrong. vCloud is here to stay. We are an ESX shop and if needed we will absolutely build one of those clouds. But for now this was too close to home. We needed to learn the open source community and we needed a product that is potentially free to use. Having said that, we set up a shootout between OpenStack and CloudStack. The requirements, next to the newness and the license model, were the openness of the system, the ability to contribute to the community ourselves, and the amount of time our engineers need to install, modify, tweak and tune the system. Based on those requirements CloudStack became the preferred tool. In the beginning we were still worried big time, because the move to the Apache Software Foundation had not yet been announced. Once it was, the outcome of the PoC was obvious. Spark404 could contribute our items directly to the core team. The community is exploding as we speak. So many features are being built in at a very fast pace. Our engineers needed little time to learn CloudStack, way less than with OpenStack, and the engineering effort needed with OpenStack was bigger than we expected. Having said that, the richness of the OpenStack suite is very promising. If they get their release cycle straightened out, it is obvious that they can be a massive player in this field.

- Configuration Management. CFEngine 3, Chef or Puppet? We, as addicts of the CFEngine 2 product, are in need of a proper config management tool. Upgrading to version 3 of CFEngine was within reach, and so was Chef. A number of new Mission Critical Engineers had used Chef in their previous lives. We handed both options to the team. Before we knew it, the first recipes and cookbooks were being created. One tough internal discussion later (or maybe five), we voted with our feet. The team uses Chef and they are happy campers doing so. The Opscode community is great, and with trainers such as Mandi Walls we trained approx. 35 people in only two sessions. Having said that, we have had great conversations with Mark Burgess on this topic. The Theory of Promises sounds like a novel. One that you need to read at least once in your life. Nevertheless, momentum was in favor of Chef, and that is what we use for our Mission Critical Cloud.

Some questions I was asked during and after the talk:

Q: You talked about two datacenters not far apart, so you can use stretched VLANs. What is the max distance you use? A: Of course the distance is measured in milliseconds (or less). In our situation this is always less than 50 km.

Q: If you had to make the OpenStack / CloudStack decision again, would it have the same outcome? A: Most probably yes. We still do not have the manpower to glue the OpenStack components together. And the release cycle is still not so clear to us. Having said that, we picked CloudStack 10 months ago. It could be that my info on OpenStack is a bit outdated, because we are not really following their moves in detail. It could be that they have made progress in those areas.

Q: Did you know about or talk to companies that use Nexenta beforehand? A: Yes, we have talked to more than one company: one university and one hosting provider in France. They had similar experiences with the product, although one of them faced performance issues. We figured we could tackle this issue rapidly, so we went for Nexenta.
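To put the "distance is measured in milliseconds" answer from the stretched-VLAN question into numbers, here is a rough back-of-the-envelope sketch. It assumes light travels through optical fibre at about 200,000 km/s (roughly two thirds of the speed of light in vacuum), and it ignores switch and optics latency. The function names are made up for this illustration, and the real fibre path is usually longer than the distance on the map, so treat the result as a lower bound.

```python
# Rough propagation-delay estimate for a link between two datacenters.
# Assumption: signal speed in fibre is about 200,000 km/s, which is
# about 5 microseconds per kilometre one way. Equipment latency is ignored.

FIBRE_SPEED_KM_PER_S = 200_000.0  # assumed typical value, not a measurement


def one_way_delay_ms(fibre_km: float) -> float:
    """Propagation delay in milliseconds over fibre_km kilometres of fibre."""
    return fibre_km / FIBRE_SPEED_KM_PER_S * 1000.0


def round_trip_delay_ms(fibre_km: float) -> float:
    """Round-trip propagation delay in milliseconds."""
    return 2.0 * one_way_delay_ms(fibre_km)


if __name__ == "__main__":
    for km in (10, 25, 50):
        print(
            f"{km} km: one way {one_way_delay_ms(km):.3f} ms, "
            f"round trip {round_trip_delay_ms(km):.3f} ms"
        )
```

Under these assumptions, 50 km of fibre adds about 0.25 ms one way and 0.5 ms round trip, which is why staying under that distance keeps the link well below a millisecond.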

https://speakerdeck.com/schubergphilis/cloud-computing-expo-2012